Don’t worry, I’m not going to talk about blockchain or geopositioning, but a more boring and basic topic: hash and hash map functions.

In this post, I will describe you a real case in Nextail, where the change of a single line of code related to a hash function changed the performance of an application, both for CPU consumption and memory.

A friend suggested me to title the post with “How to save thousands of dollars to your company by changing a single line of code”, but as I have not been able to quantify it objectively I have opted for a title with less clickbait.

Hash functions are a basic tool in technology, with multiple practical applications. It is essential to know how they work, and the implications that selecting a hash function may have.

The problem

At Nextail we handle a large volume of data, but the basic domain on which they apply is limited: countries, cities, shops, products, product references, etc. On average in a customer, the number of countries, cities or stores do not usually exceed the order of thousands, and product references hundreds of thousands.

When you save all these entities in a database and you use a primary key generated by a sequence, identifiers are integers ranging from 1 to N, being N a relatively low number compared to the range of 32-bit positive integers (from 0 to 2,147,483,647).

In business logic, it is usual to have objects that relate two entities of your domain, for example, the stock or sales of a product in a particular store.

In a database that information can be stored in a table, and to quickly locate data of a product in a store we create an index composed by the identifiers of the store and the product. In business logic when you have all that information in memory as objects, in order to be able to work with all of them, it is usual for those objects to end up in a map or dictionary.

The use of this data structure becomes critical because of the number of operations that depend on it.

A bad choice of the hash function to apply on a map can penalize the memory consumption and performance of your application.

To understand the problem of these composite indices in a map we will first review the data structure.

The source code with examples and utilities for performing metrics can be found in this github repository.

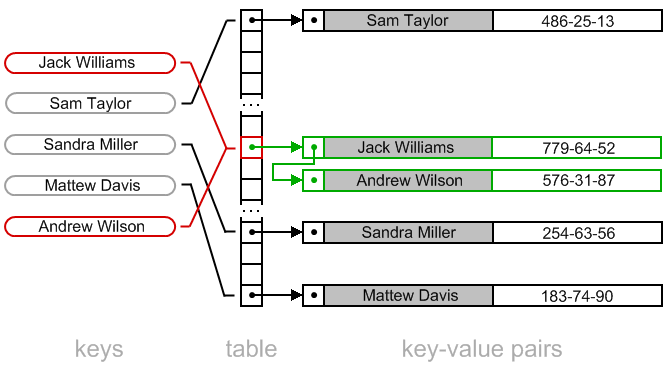

Map

The most efficient way to implement a Map is through hash tables, and an important point for a hash table to perform well is the hash function to apply to each key.

In Java the most commonly used implementation is HashMap (avoid HashTable, one of Java’s youthful errors). If the order in which you iterate keys is important you can use a TreeMap, but if you’re worried about concurrency, you must use a ConcurrentHashMap.

In any case, Java only requests two things to the object you use as map key:

- It must reimplement the method hashCode() which inherits from the Object class: if it is not reimplemented, it will use something that depends on the instance’s memory address. Therefore, two identical instances in data will have different

hashCodevalues. - It must reimplement the method equals() which inherits from the Object class: if it is not reimplemented, it will compare instance references, so it will also happen that two identical instances (containing same data) will not be considered equal.

Therefore, if you don’t reimplement either method you are introducing bugs in your application.

Which value is printed in console when you execute the following code? Which is the expected value?

public class Person {

private Integer id;

private String name;

public Person(Integer id, String name) {

this.id = id;

this.name = name;

}

}

public static void main(String[] args) {

Map<Person, Integer> map = new HashMap<>();

map.put(new Person(1, "Alberto"), 35);

map.put(new Person(2, "Ana"), 28);

//more operations and in a place away from previous code...

map.put(new Person(1, "Alberto"), 36);

System.out.println(map.size());

}

The expected result is to have 2 objects in the map, but we find 3 because the second time we add Alberto with id = 1, map will not consider that it already exists and will create another entry in the map.

How you implement hashCode and equals functions will depend on your business logic and on how you define the identity of your object, but you should use the same attributes that would define your primary key if it were in a database.

With the help of our favorite IDE we can easily generate all this verbose code, but necessary:

public class Person {

private Integer id;

private String name;

public Person(Integer id, String name) {

this.id = id;

this.name = name;

}

public int hashCode() {

return Objects.hash(id);

}

public boolean equals(Object obj) {

if (this == obj)

return true;

if (obj == null)

return false;

if (getClass() != obj.getClass())

return false;

Person other = (Person) obj;

return Objects.equals(id, other.id);

}

}

I invite you to perform the same test in JavaScript, the result will surprise you, or not… :)

The hash function

In this example I use as hash function the code generated by the IDE, which uses the criteria proposed by Java best practice. Although it would be better and simpler to return the id directly.

The method calls this code from the Arrays class, which is the standard to calculate the hash of a compound key:

public static int hashCode(Object a[]) {

if (a == null) return 0;

int result = 1;

for (Object element : a)

result = 31 * result + (element == null ? 0 : element.hashCode());

return result;

}

To reach the greatest dispersion of the keys, the method iterates the elements that compose the key multiplying by 31 the previous value and adding the hashCode of the iterated value.

To understand the reasons to use 31, better quote Joshua Bloch in his must-read book Effective Java:

The value 31 was chosen because it is an odd prime. If it were even and the multiplication overflowed, information would be lost, as multiplication by 2 is equivalent to shifting. The advantage of using a prime is less clear, but it is traditional. A nice property of 31 is that the multiplication can be replaced by a shift and a subtraction for better performance:

31 * i == (i << 5) - i. Modern VMs do this sort of optimization automatically.

Just because this is the default hash function in Java doesn’t mean it’s the best in all circumstances. In most cases, it provides satisfactory results and meets the requirements that are demanded to a hash function, but in some cases we will have to reconsider its suitability.

Collisions

One of the most important properties of a hash function is that the result must be uniform:

A good hash function should map the expected inputs as evenly as possible over its output range. That is, every hash value in the output range should be generated with roughly the same probability.

In this way, the number of matching values is minimized and the hash table implementation has to work less on relocating keys with the same hash value.

How to solve this is one of the areas where different hash tables implementations do most effort and it is the source of many papers. Depending on how collisions are distributed (therefore your hash function), a hash table implementation can go from having a cost of O(1) to one of O(n) for insertion or query operations.

Analysis of the Java standard hash function

Going back to what I said at the beginning: what happens when we analyze the behavior of the Java standard hash function on objects with the following characteristics?

- They are objects that store information about two related entities, and therefore their key (and hash function) is composed of two values.

- Keys are a subset of Integer values, ranging from 0 to N, being N a relatively low value.

An object representing it can take this shape:

public class MyObject {

private MyObjectKey key;

private String someAttribute;

public MyObject(int firstKey, int secondKey, String someAttribute) {

this.key = new MyObjectKey(firstKey, secondKey);

this.someAttribute = someAttribute;

}

public MyObjectKey getKey() { return key; }

public String getSomeAttribute() { return someAttribute; }

public int hashCode() { return key.hashCode(); }

public boolean equals(Object obj) { ... }

}

public class MyObjectKey {

private int firstKey;

private int secondKey;

public MyObjectKey(int firstKey, int secondKey) {

this.firstKey = firstKey;

this.secondKey = secondKey;

}

public int getFirstKey() { return firstKey; }

public int getSecondKey() { return secondKey; }

public int hashCode() {

int result = 31 + firstKey;

return 31 * result + secondKey;

}

public boolean equals(Object obj) { ... }

}

A simplified use of this class with a Java Map could be:

Map<MyObjectKey, MyObject> map = new HashMap<>();

//Or loaded from database:

MyObject obj1 = new MyObject(1311, 313, "Madrid");

MyObject obj2 = new MyObject(1332, 313, "Barcelona");

MyObject obj3 = new MyObject(1311, 928, "Zaragoza");

....

....

map.put(obj1.getKey(), obj1);

map.put(obj2.getKey(), obj2);

map.put(obj3.getKey(), obj3);

If we study the Map class internals we see that it doesn’t use the value returned by your hash function directly, but applies one more transformation to improve the dispersion of the function:

static final int hash(Object key) {

int h;

return (key == null) ? 0 : (h = key.hashCode()) ^ (h >>> 16);

}

What happens if we load in memory all the objects whose key satisfies the following values?

- firstKey: from 0 to 50,000, which could represent the identifiers of my products

- secondKey: from 0 to 1,000, which could represent the identifiers of the stores

We are loading 50 million objects in the pessimistic case in which all products are present in all stores.

If we analyze the number of collisions with a code that reproduces the calculations, and the collisions shape of loading all those records into a HashMap:

for (int i = 0; i < n_products; i++) {

for (int j = 0; j < n_stores; j++) {

int hashValue = HashMap.hash(Objects.hash(i, j));

histogram.add(hashValue);

}

}

You can see the following:

- 399,744 times occurs that 33 instances collide with each other

- 1,149,303 times occurs that 32 instances collide with each other.

- 62 times it occurs that 31 instances collide with each other

- 62 times it occurs that 30 instances collide with each other

- 62 times it occurs that i < 31 instances collide with each other until i = 1

In other words, 99.93% of the time any combination of a product and a store will have a collision with at least 31 other values.

Which are the consequences of this?

- Stop behaving like a HashMap and it seems more a Tree

- Tree structure consumes more memory

- Makes intensive use of the

equalsfunction of the key

In summary: there is a large consumption of memory and CPU.

How does HashMap resolve collisions?

Internally HashMap defines an array of nodes of a certain size that varies according to the total number of elements. To the value resulting from applying the hash function, HashMap divides it by the current size of that array and takes the rest of the division.

Therefore, we must not only take into account the number of collisions of the hash function, and we must also take into account the number of collisions in the distribution of hash values in the array.

If two keys have the same result applying the hash function, they will be placed in the same position of the array. HashMap uses closed addressing technique with open hashing: each array element contains a linked list of nodes where it appends, as collisions arrive at that position of the array.

Each Node in the list contains: the Key, the Value, the Key hash value and a reference to the next element in the list.

If the hash function is good enough, the number of elements in lists will be low, and the number of chains will also be low. But if we are unlucky enough to have many collisions, the HashMap implementation changes its strategy and instead of managing a linked list with O(n) complexity, it changes to a red-black tree with O(log n) complexity.

This behavior was introduced in Java 8 to improve performance in a transparent way for us, keeping backward compatibility as we are used to.

In HashMap, the necessary number of collisions in the same bucket to become a tree is 8. If we return to my example, this value is reached in 99.99% of the cases. Whenever we need to access the map, we will have to go through a tree.

Memory consumption

Because HashMap has to switch to a tree structure in 99.99% of buckets, memory consumption increases. The array bucket becomes a TreeNode, which extends the Node class and adds 6 more pointers to manage the balanced red-black tree structure.

Depending on the amount of memory assigned to a running JVM, it will use either 32-bit or 64-bit pointers, so all attributes of reference type will consume up twice as much space. The memory consumed by an instance of Node and TreeNode depending on pointers size is:

| 32 bits | 64 bits | |

|---|---|---|

| Node | 32 bytes | 48 bytes |

| TreeNode | 56 bytes | 104 bytes |

In my case, it is very common to launch processes with more than 32GB of memory, and I am required to use 64-bit addressing. The difference between the linked list version and the tree one is more than twice as much memory.

Besides this, we must add HashMap’s management of the internal array: its size varies depending on the number of elements stored in the HashMap, without any relation on how its content is distributed by its collisions. Therefore, the more content the HashMap has, the larger the array will be, trying to distribute all its elements along the array (unsuccessfully if your hash function is inadequate).

If the number of collisions is too high, the elements will concentrate on a few positions in the array, while the rest will be empty. If we return to my example with 50,000 products and 1,000 stores, a HashMap containing the Cartesian product using the standard hash function with 32-bit memory addresses, we notice that:

- There are 49,997,768 TreeNode instances that consume 2,799,875,008 bytes

- There are 2,232 Node instances that consume 71,424 bytes

- The array has 67,108,864 positions that consumes 268,435,456 bytes:

- 496 contain elements of type Node

- 1.550.473 contain elements of type TreeNode

- 65,557,895 of the positions are empty, 97.69% of them!

We have a map that consumes 2.86 GB of memory, without taking into account the space used by the instances that serve as key nor the associated value. With 64 bits addressing the map consumes 5.34GB, 85% more. This highlights that almost all the information of a TreeNode are pointers.

CPU Consumption

When you query or insert a value in a HashMap and reach a collision, you have to go through the list of items searching if any of the items match in their hash value and in the identity of the key. To know if two keys are identical we must use the equals function that you should have redefined.

Therefore in each access operation, it may have to perform up to 8 comparisons if it still uses a list, or Log2(N) if it has changed to a tree, where N is the average number of elements in the trees.

By switching to a balanced tree, HashMap is able to keep down the cost of finding the value in the open hashing, at the price of consuming more memory.

If we come back to the numbers of my example, we find that in order to insert 50 million elements, the equals method of the Key class is called 942.272.996 times: about 19 times per inserted element. As a reference, on my computer, it takes 120 seconds to create the map.

When I profiled the application at Nextail, I was very surprised that a large percentage of the run time was spent on the equals function. The first thing I thought was that we had misimplemented it, wasting time to understand what was wrong with its implementation (the Java standard). Until I realized that there was nothing wrong: it was just called too many times. This was the key moment to understand that we had a problem with its hash function.

I still don’t have enough knowledge to measure it and reach conclusions, but I’m sure that converting Node objects to TreeNode has an impact on the garbage collector, and that larger objects should also penalize the use of CPU cache lines.

The solution

As I said at the beginning, changing a single line of code can lead to a radical change in the resource consumption of your application. In this case, the line of code is located in the implementation of the hashCode method:

public class MyObjectKey {

public int hashCode() {

return (firstKey << 16) + secondKey;

}

}

Which is the key of my solution? Exactly the origin of the problem: objects identifiers are values that are within a low range of integers and are consecutive values, with low dispersion. The results of the hash function end up concentrated in a small range of integers.

The hash function must return a 32-bit integer, but the values involved in its calculation are integers that do not exceed 16 bits of information, therefore we can compose a 32-bit integer, using 16 of them in the most significant part of the integer and the other 16 in the least significant part of the integer. This will guarantee that the hash function will never return two colliding values, minimizing the number of collisions within the array.

Depending on the application domain, if one of the identifiers takes values higher than 65,535, you can balance the number of bits, using more for one value and less for the other. For example, using 20 for product identifier and 12 bits for stores identifier we would have: (firstKey << 12) + secondKey

- 220 = 1,048,576 possible products

- 212 = 4,096 possible stores

If both ranges are not large enough for the domain identifiers we will have collisions, but it would still be much less compared to the default hash function.

The numbers

Given this new hash function, how does HashMap behave? With the same 50,000 products and 1,000 stores, a HashMap containing the Cartesian product using this new hash function, with 32-bit memory addresses we have:

- No TreeNode instance exists

- There are 50,000,000 instances of type Node that consume 1,600,000,000 bytes

- The array has 67,108,864 buckets consuming 268,435,456 bytes:

- 50,000,000 of them contain elements of type Node

- 17,108,864 of them are empty

Therefore, each Node instance is located in a different array position, and there are no internal collisions in the array. The array size is 2²⁶, the power of two immediately superior to the 50 million records.

In total, we are consuming 1.74 GB of memory in the 32-bit version, while in the 64-bit version it is 2.74 GB.

| 32 bits | 64 bits | |

|---|---|---|

| Optimized | 1,74GB | 2,74GB |

| Standard Hash | 2,86 GB | 5,34 GB |

| % memory | +64% | +95% |

Regarding CPU consumption, it takes 80 seconds to create the same HashMap, compared to 120 in the suboptimal version (50% more time!). The number of invocations to the Key equals function becomes zero. Because the hash function always returns a different value, it doesn’t need to verify the equality of objects.

Conclusion

The Java Collections Framework is one of the platform’s strengths because it helps us to standardize the code and saves us the trouble of building our abstract data types libraries.

But it has made our life so easy that it has made us lazier (myself included) and we stop paying attention to them. Many people don’t know the difference between a HashSet and a TreeSet, or which properties a LinkedHashMap has versus a HashMap (a classic in job interview questions).

To become productive, in addition to the syntax of a language we must also master the libraries that compose its core.

Without going crazy reviewing your entire code base (you already know that Premature optimization is the root of all evil), take always into account the nature of the data you are managing and algorithms complexity. In my case understanding how the hashCode method is applied on a HashMap was critical to fixing a performance problem.

. . .

The example I have used has been explanatory, and it is pessimistic using the Cartesian product of products and stores, and in addition, all identifiers are in a very dense range of numbers. Problems that I solve on my day-to-day basis, it is not like that: it’s a subset of that Cartesian product and the identifiers are more dispersed, but not enough to get rid of the collision problem and be manifest in performance analysis.

The example could have been better solved with a two-dimensional matrix, or using other data structures that are better suited to this type of problem. In a next post, starting from the same problem, I will make an analysis to compare between Map<MyObjectKey, MyObject> and Map<Integer, Map<Integer, MyObject>>.

As usual, any clarification and feedback will be welcome in the comments!