Parquet is a widely used format in the Data Engineering realm and holds significant potential for traditional Backend applications. This article serves as an introduction to the format, including some of the unique challenges I’ve faced while using it, to spare you from similar experiences.

Introduction

Apache Parquet, released by Twitter and Cloudera in 2013, is an efficient and general-purpose columnar file format for the Apache Hadoop ecosystem. Inspired by Google’s paper “Dremel: Interactive Analysis of Web-Scale Datasets”, Parquet is optimized to support complex and nested data structures.

Although it emerged almost simultaneously with ORC (from Hortonworks and Facebook), Parquet appears to have become the more widely used of the two.

Unlike row-oriented formats, Parquet organizes data by columns, enabling more efficient data persistence through advanced encoding and compression techniques.

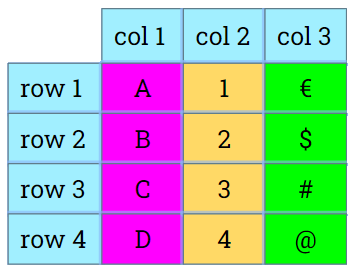

Logical table

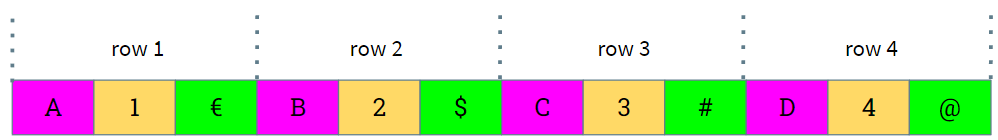

Row Oriented storage:

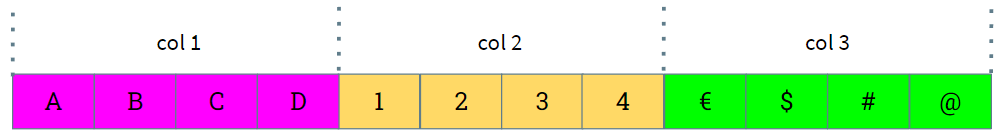

Column Oriented Storage:

When it comes to reading data, Parquet’s columnar organization pays off: if a query needs only some of the columns, the reader can skip the data blocks of all the other columns instead of reading and decoding them, which significantly improves efficiency.

Rule number 1 of data engineering: the fastest data is the one you don’t read

Thousand of CPU hours wasted reading and filtering data that should never have been read.

(javi santana, @javisantana, November 18, 2023)
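To make the difference concrete, here is a small in-memory sketch in Java. The class and data are hypothetical, and real Parquet files add pages, encodings, and compression on top of this idea. The same three-row table is stored once as an array of rows and once as one array per column; summing the amounts in the columnar version touches only the amounts array.

```java
import java.util.Arrays;

// Hypothetical demo: one small table stored row-wise and column-wise in memory.
final class LayoutDemo {

    // Row-oriented: each inner array holds every field of one record (id, name, amount).
    static final Object[][] ROWS = {
        {1L, "Alice", 30.0},
        {2L, "Bob", 12.5},
        {3L, "Carol", 7.5},
    };

    // Column-oriented: one contiguous array per column.
    static final long[] IDS = {1L, 2L, 3L};
    static final String[] NAMES = {"Alice", "Bob", "Carol"};
    static final double[] AMOUNTS = {30.0, 12.5, 7.5};

    // Amounts are interleaved with the id and name of each record; in a
    // row-oriented file, a reader would have to get past those bytes too.
    static double sumAmountsRowWise() {
        double total = 0;
        for (Object[] row : ROWS) {
            total += (Double) row[2];
        }
        return total;
    }

    // Only the AMOUNTS array is touched; IDS and NAMES are never read.
    static double sumAmountsColumnar() {
        return Arrays.stream(AMOUNTS).sum();
    }

    public static void main(String[] args) {
        System.out.println(sumAmountsRowWise());  // 50.0
        System.out.println(sumAmountsColumnar()); // 50.0
    }
}
```

Both methods return the same total; the point is which bytes each layout forces you to walk over.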

Columnar storage is common in analytical databases (such as BigQuery, ClickHouse, and QuestDB) and in other data formats, such as Apache Arrow (in memory) and ORC (on disk).

Format

Parquet stores data in binary form, so, unlike text-based formats such as JSON, XML, or CSV, its contents are unreadable when printed to the console. Binary storage makes Parquet efficient and fast, at the cost of the human readability that text formats offer.

Because Parquet is designed for large volumes of data, it makes sense to store the schema, along with additional statistical metadata, directly inside each file (in a footer at the end of the file). This is especially useful when the schema is not known in advance: every Parquet file contains everything needed to deduce its schema, so the data can be read and analyzed even when its structure is unknown or varies from file to file.
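As a rough illustration of where that metadata lives: a Parquet file starts and ends with the 4-byte magic string PAR1, and just before the trailing magic sits a 4-byte little-endian integer giving the length of the footer, which holds the Thrift-encoded schema and statistics. The sketch below is a hypothetical helper using only the JDK; it locates the footer in an in-memory byte array and leaves decoding the footer itself to a real Parquet library.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;
import java.nio.charset.StandardCharsets;
import java.util.Arrays;

// Hypothetical helper: locates the footer of a Parquet file held in memory.
// Tail layout: <footer> <4-byte little-endian footer length> "PAR1"
final class ParquetTrailer {
    static final byte[] MAGIC = "PAR1".getBytes(StandardCharsets.US_ASCII);

    // Length in bytes of the (Thrift-encoded) footer metadata.
    static int footerLength(byte[] file) {
        // Smallest possible frame: leading magic + length field + trailing magic.
        if (file.length < 12) {
            throw new IllegalArgumentException("too short to be a Parquet file");
        }
        byte[] head = Arrays.copyOfRange(file, 0, 4);
        byte[] tail = Arrays.copyOfRange(file, file.length - 4, file.length);
        if (!Arrays.equals(head, MAGIC) || !Arrays.equals(tail, MAGIC)) {
            throw new IllegalArgumentException("missing PAR1 magic bytes");
        }
        int length = ByteBuffer.wrap(file, file.length - 8, 4)
                .order(ByteOrder.LITTLE_ENDIAN)
                .getInt();
        if (length < 0 || length > file.length - 12) {
            throw new IllegalArgumentException("corrupt footer length: " + length);
        }
        return length;
    }

    // Offset at which the footer starts, i.e. where a reader would begin decoding it.
    static int footerOffset(byte[] file) {
        return file.length - 8 - footerLength(file);
    }
}
```

This is also why readers need random access to the file: the first thing they do is jump to the end to find the schema.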

Parquet supports a small set of primitive types, which can be extended through logical types that give them their own semantics:

- BOOLEAN: 1-bit boolean (boolean in Java)

- INT32: 32-bit signed integer (int in Java)

- INT64: 64-bit signed integer (long in Java)

- INT96: 96-bit signed integer (no direct equivalent in Java); deprecated, and used in practice by legacy implementations to store timestamps

- FLOAT: 32-bit IEEE floating-point value (float in Java)

- DOUBLE: 64-bit IEEE floating-point value (double in Java)

- BYTE_ARRAY: variable-length byte array

- FIXED_LEN_BYTE_ARRAY: fixed-size byte array

Higher-level types such as strings, enums, UUIDs, and various date and time types are built on top of these primitives as logical types. For example, a string is a UTF-8 encoded BYTE_ARRAY, and a date is an INT32 counting days since the Unix epoch. Building on a small set of physical types is what lets Parquet accommodate such a wide range of data.
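The mapping from logical to physical types can be sketched in plain Java. The helpers below are hypothetical and are not part of parquet-mr (real writers perform these conversions internally); they only show which physical representation the Parquet specification assigns to a few common logical types.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;
import java.time.Instant;
import java.time.LocalDate;
import java.util.UUID;

// Hypothetical helpers, not the parquet-mr API.
final class LogicalTypes {

    // STRING annotates BYTE_ARRAY: the value is stored as UTF-8 bytes.
    static byte[] string(String value) {
        return value.getBytes(StandardCharsets.UTF_8);
    }

    // DATE annotates INT32: number of days since 1970-01-01.
    static int date(LocalDate value) {
        return Math.toIntExact(value.toEpochDay());
    }

    // TIMESTAMP(MILLIS) annotates INT64: milliseconds since the Unix epoch.
    static long timestampMillis(Instant value) {
        return value.toEpochMilli();
    }

    // UUID annotates FIXED_LEN_BYTE_ARRAY(16): the 16 bytes in big-endian order
    // (ByteBuffer's default byte order).
    static byte[] uuid(UUID value) {
        return ByteBuffer.allocate(16)
                .putLong(value.getMostSignificantBits())
                .putLong(value.getLeastSignificantBits())
                .array();
    }
}
```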

The format supports persisting complex data structures, lists, and maps in a nested manner, which opens the door to storing any type of data that can be structured as a Document.

Historically, collections could be represented internally in several ways, depending on how you wanted to handle a collection being null or empty. In addition, the names of the intermediate fields that wrap each element are an implementation detail that has varied between implementations of the format. As a result, tools in different languages have generated files that are incompatible with each other.

The official representation of collections is now defined in the Parquet specification, yet many tools still write legacy layouts by default to preserve backward compatibility, so you must configure them explicitly to produce the standard one. For instance, when using Pandas with PyArrow, the use_compliant_nested_type option must be enabled, while in parquet-avro for Java, the parquet.avro.write-old-list-structure property (AvroWriteSupport.WRITE_OLD_LIST_STRUCTURE, whose default, WRITE_OLD_LIST_STRUCTURE_DEFAULT, is true) must be set to false.
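To make the naming differences concrete, this hypothetical Java helper returns the schema shape of a list of int32 values in the standard three-level layout and in two legacy layouts. The field names (list/element, array, item) follow the Parquet specification and the legacy behavior of parquet-avro and PyArrow; the repetition of the outer group and of the elements is simplified to required so the three shapes are easier to compare.

```java
// Hypothetical helper: only the schema shapes matter, not this code.
final class ListLayouts {

    enum Style { STANDARD, LEGACY_AVRO_TWO_LEVEL, LEGACY_PYARROW_ITEM }

    // Schema text for a list of int32 values called `name`.
    static String listSchema(String name, Style style) {
        String open = "required group " + name + " (LIST) {\n";
        switch (style) {
            case STANDARD:
                // Specification: repeated group "list" wrapping a field named "element".
                return open
                        + "  repeated group list {\n"
                        + "    required int32 element;\n"
                        + "  }\n"
                        + "}";
            case LEGACY_AVRO_TWO_LEVEL:
                // parquet-avro's old list structure: the repeated field is the element
                // itself, named "array", so there is no level at which it could be null.
                return open
                        + "  repeated int32 array;\n"
                        + "}";
            case LEGACY_PYARROW_ITEM:
                // Three levels, but PyArrow without use_compliant_nested_type
                // names the element "item" instead of "element".
                return open
                        + "  repeated group list {\n"
                        + "    required int32 item;\n"
                        + "  }\n"
                        + "}";
            default:
                throw new IllegalArgumentException("unknown style: " + style);
        }
    }

    public static void main(String[] args) {
        for (Style style : Style.values()) {
            System.out.println(style + ":\n" + listSchema("my_list", style) + "\n");
        }
    }
}
```

A reader expecting one of these shapes may fail, or silently misread the data, when given a file written with another.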

While Parquet utilizes an IDL to define the data format within a file (its schema), it lacks a direct, standard tool for generating Java code from an IDL to facilitate the serialization and deserialization of Parquet data in simple Java classes.

Parquet builds compression into its encoding: it automatically applies techniques such as run-length encoding (RLE) and bit packing, and it can use dictionary encoding, which stores each distinct value of a column chunk once and replaces repetitions with small indices. On top of that, data pages can optionally be compressed with a codec such as Snappy, GZip, or LZ4.

Snappy is typically used as the default codec because it strikes a good balance between compression ratio and CPU cost.
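The idea behind dictionary and run-length encoding can be sketched in a few lines of Java. This is a simplified, hypothetical illustration: Parquet’s actual format stores the indices with its RLE/bit-packing hybrid encoding, not as the (index, run length) pairs used here.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashMap;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Simplified illustration, not Parquet's wire format.
final class DictionaryRle {

    // Dictionary encoding: distinct values in first-seen order; a value's
    // position in this list is its index.
    static List<String> dictionary(List<String> column) {
        Map<String, Integer> ids = new LinkedHashMap<>();
        for (String value : column) {
            ids.putIfAbsent(value, ids.size());
        }
        return new ArrayList<>(ids.keySet());
    }

    // Run-length encoding of the dictionary indices, as [index, runLength] pairs.
    static List<int[]> encodeRuns(List<String> column, List<String> dictionary) {
        Map<String, Integer> index = new HashMap<>();
        for (int i = 0; i < dictionary.size(); i++) {
            index.put(dictionary.get(i), i);
        }
        List<int[]> runs = new ArrayList<>();
        for (String value : column) {
            int idx = index.get(value);
            int[] last = runs.isEmpty() ? null : runs.get(runs.size() - 1);
            if (last != null && last[0] == idx) {
                last[1]++; // same value as the previous row: extend the run
            } else {
                runs.add(new int[] {idx, 1}); // new value: start a new run
            }
        }
        return runs;
    }

    public static void main(String[] args) {
        List<String> country = Arrays.asList("ES", "ES", "ES", "FR", "FR", "ES");
        List<String> dict = dictionary(country);
        System.out.println(dict); // [ES, FR]
        for (int[] run : encodeRuns(country, dict)) {
            System.out.println(Arrays.toString(run)); // [0, 3] then [1, 2] then [0, 1]
        }
    }
}
```

Six strings become a two-entry dictionary plus three small runs; with long runs of repeated values, the savings grow quickly.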

When serializing or deserializing large amounts of data, Parquet lets you write or read records one at a time, so you don’t need to hold the whole dataset in memory, unlike Protocol Buffers or FlatBuffers. You can serialize a stream of records, or iterate through a file reading records one by one. (The writer still buffers the current row group in memory before flushing it to disk.)
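With parquet-mr, reading typically follows a loop around ParquetReader.read(), which returns the next record, or null once the file is exhausted. The sketch below reproduces that loop against a hypothetical RecordSource interface backed by a list, so it runs without Parquet on the classpath; with a real reader, each record would be decoded from the file on demand.

```java
import java.util.Iterator;
import java.util.List;

final class StreamingRead {

    // Hypothetical stand-in for a Parquet reader: read() returns the next
    // record, or null at the end of the input.
    interface RecordSource<T> extends AutoCloseable {
        T read();

        @Override
        void close();
    }

    // Demo source backed by a list; a real reader decodes records from the file.
    static <T> RecordSource<T> fromList(List<T> records) {
        Iterator<T> it = records.iterator();
        return new RecordSource<T>() {
            @Override
            public T read() {
                return it.hasNext() ? it.next() : null;
            }

            @Override
            public void close() {
            }
        };
    }

    // Aggregates record by record; only the current record is referenced here.
    static long sumLengths(RecordSource<String> source) {
        long total = 0;
        try (RecordSource<String> s = source) {
            String record;
            while ((record = s.read()) != null) {
                total += record.length();
            }
        }
        return total;
    }
}
```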

Documentation

Despite the format’s significance in the world of Data Engineering, documentation on its basic use is quite scarce, particularly in the context of Java.

How would you feel if, to learn how to read or write JSON files, you had to go through Pandas or Spark, with no straightforward way to do it directly? That’s the sensation you get when you start studying Parquet.

High-level tools commonly used by Data Engineers, such as Pandas and Spark, offer built-in methods to seamlessly export and import data to Parquet (and other formats), simplifying the process by hiding the underlying complexities. However, finding documentation and examples for using Parquet independently of these tools is challenging, as the information is scattered across multiple articles written by different individuals.

What would you say if, to read or write JSON files, you had to go through other tools/formats such as Avro or Protocol Buffers, and there was no library that supported it directly? This is the scenario encountered with Parquet.

Because there is no straightforward library dedicated to Parquet files, you have to rely on third-party libraries built for other serialization formats, which makes Parquet harder to master.

Libraries

The Parquet library for Java does not offer a direct way to read or write Parquet files. Unlike Jackson for JSON, or the Protocol Buffers library for its own format, Parquet does not include a function to read or write Java objects (POJOs) or Parquet-specific data structures.

When working with Parquet in Java, there are two main approaches:

- Using the low-level API provided by the Parquet library (this would be equivalent to processing the tokens of a JSON or XML parser).

- Employing the functionalities of other serialization libraries, like Avro or Protocol Buffers.

Among the libraries that make up the Apache Parquet project in Java, there are specific ones (parquet-avro and parquet-protobuf) that use Avro or Protocol Buffers classes and interfaces to read and write Parquet files. These libraries rely on the low-level API of parquet-mr to convert Avro or Protocol Buffers objects into Parquet files and vice versa.

To summarize, when working with Parquet in Java you will deal with three sets of classes, each belonging to a different API:

- The API of your chosen serialization library, which provides the way to define serialized Objects and interact with them.

- The API of the wrapper library for the serialization library you have chosen, with the readers and writers for these Objects.

- The API of the low-level parquet-mr library itself, which defines common interfaces and configurations and handles the actual serialization process.

The most user-friendly and flexible option, and the one most often found in online examples, is Avro. However, Protocol Buffers is also a viable choice.

Using the utilities of these formats does not mean the data is serialized twice, first into an intermediate format. Instead, the wrappers reuse the classes generated by Avro or Protocol Buffers to hold the data, and write it directly to Parquet through the parquet-mr API.
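A minimal sketch of what such a wrapper does, under stated assumptions: MiniRecordConsumer is a hypothetical, trimmed-down interface loosely modeled on parquet-mr’s RecordConsumer (startMessage, startField, addLong, and so on), and Person stands in for an Avro or Protocol Buffers object. The wrapper walks the object and pushes each field into the consumer; no intermediate serialized form is produced.

```java
import java.util.ArrayList;
import java.util.List;

final class WrapperSketch {

    // Hypothetical, simplified interface inspired by parquet-mr's RecordConsumer.
    interface MiniRecordConsumer {
        void startMessage();
        void endMessage();
        void startField(String name, int index);
        void endField(String name, int index);
        void addLong(long value);
        void addString(String value); // the real API takes a Binary; simplified here
    }

    // Hypothetical domain object; with parquet-avro this would be an Avro record.
    static final class Person {
        final long id;
        final String name;

        Person(long id, String name) {
            this.id = id;
            this.name = name;
        }
    }

    // Roughly the role a wrapper's write support plays: object fields in, column values out.
    static void write(Person p, MiniRecordConsumer consumer) {
        consumer.startMessage();
        consumer.startField("id", 0);
        consumer.addLong(p.id);
        consumer.endField("id", 0);
        consumer.startField("name", 1);
        consumer.addString(p.name);
        consumer.endField("name", 1);
        consumer.endMessage();
    }

    // A consumer that only records the calls, standing in for Parquet's column writers.
    static final class LoggingConsumer implements MiniRecordConsumer {
        final List<String> events = new ArrayList<>();

        public void startMessage() { events.add("startMessage"); }
        public void endMessage() { events.add("endMessage"); }
        public void startField(String name, int index) { events.add("startField " + name); }
        public void endField(String name, int index) { events.add("endField " + name); }
        public void addLong(long value) { events.add("long " + value); }
        public void addString(String value) { events.add("string " + value); }
    }
}
```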

Abstraction Over Files

The Parquet library is agnostic to the location of the data: it could be on a local file system, within a Hadoop cluster, or stored in S3.

To provide an abstraction layer over file locations, Parquet defines the interfaces org.apache.parquet.io.OutputFile and org.apache.parquet.io.InputFile. These interfaces contain methods that create specialized output and input streams (positioned and seekable, respectively) for writing and reading the data.

For those interfaces, Parquet provides one implementation of each, HadoopOutputFile and HadoopInputFile, which go through Hadoop’s file system layer and can therefore access files in Hadoop, over SFTP, or on the local disk.

These implementations, in turn, require you to reference files using the org.apache.hadoop.fs.Path class, which is unrelated to Java’s own Path class, together with an org.apache.hadoop.conf.Configuration.

To reference a file, we would need to write code like this:

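```java
import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.parquet.hadoop.util.HadoopInputFile;
import org.apache.parquet.hadoop.util.HadoopOutputFile;
import org.apache.parquet.io.InputFile;
import org.apache.parquet.io.OutputFile;

Path path = new Path("/tmp/my_file.parquet"); // Hadoop's Path, not java.nio.file.Path
OutputFile outputFile = HadoopOutputFile.fromPath(path, new Configuration()); // throws IOException
InputFile inputFile = HadoopInputFile.fromPath(path, new Configuration());
```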

Fortunately, this won’t last long: a second implementation, limited to local files, has recently been merged into master, and it is being decoupled from the Hadoop Configuration class. No release includes it yet, but it is expected to be available in version 1.14.0.
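The idea behind a local-only implementation can be sketched with java.nio alone. SimpleInputFile below is a hypothetical, simplified counterpart of InputFile (the real interface exposes getLength() and newStream(), which returns a SeekableInputStream); here a SeekableByteChannel plays the role of the seekable stream. This is not the upcoming implementation, only an illustration of why a reader needs random access: it jumps to the end of the file to find the footer before reading anything else.

```java
import java.io.EOFException;
import java.io.IOException;
import java.nio.ByteBuffer;
import java.nio.channels.SeekableByteChannel;
import java.nio.file.Files;
import java.nio.file.Path;

final class LocalFiles {

    // Hypothetical, simplified counterpart of org.apache.parquet.io.InputFile.
    interface SimpleInputFile {
        long getLength() throws IOException;
        SeekableByteChannel newChannel() throws IOException;
    }

    // Local-disk implementation using only java.nio: no Hadoop Path or Configuration.
    static final class NioInputFile implements SimpleInputFile {
        private final Path path;

        NioInputFile(Path path) {
            this.path = path;
        }

        @Override
        public long getLength() throws IOException {
            return Files.size(path);
        }

        @Override
        public SeekableByteChannel newChannel() throws IOException {
            return Files.newByteChannel(path); // no options: opened for reading
        }
    }

    // Reads the last n bytes, the way a Parquet reader first seeks to the
    // trailer to find the footer before reading any column data.
    static byte[] readTail(SimpleInputFile file, int n) throws IOException {
        long length = file.getLength();
        if (n > length) {
            throw new IllegalArgumentException("file is shorter than " + n + " bytes");
        }
        ByteBuffer buffer = ByteBuffer.allocate(n);
        try (SeekableByteChannel channel = file.newChannel()) {
            channel.position(length - n);
            while (buffer.hasRemaining()) {
                if (channel.read(buffer) < 0) {
                    throw new EOFException("unexpected end of file");
                }
            }
        }
        return buffer.array();
    }
}
```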

Dependencies

One of the major drawbacks of using Parquet in Java is the large number of transitive dependencies that its libraries have.

Parquet was conceived to be used in conjunction with Hadoop. The GitHub project for the Java implementation is named parquet-mr, where mr stands for MapReduce. As the code above shows, even the file classes live in a hadoop package.

Over time, it has evolved and become more independent, but it has not been able to fully decouple from Hadoop and still pulls in many transitive dependencies from the libraries Hadoop uses (ranging from a Jetty server to Kerberos and YARN clients).

If your project uses Hadoop, you will need all those dependencies; but if you only intend to work with regular files outside Hadoop, they just make your application heavier. I suggest excluding those transitive dependencies in your pom.xml or build.gradle.

Besides adding a lot of unnecessary code, these dependencies can cause version conflicts with transitive dependencies your project already uses.

If you don’t exclude any dependencies, you might end up with more than 130 JARs and 75 MB in your deployable artifact. However, by selectively excluding unused dependencies, I’ve been able to trim this down to just 30 JARs, with a total size of 23 to 29 MB.

As previously noted, efforts are underway to address this, but it’s not all ready yet.

Conclusion

The Parquet format stands as a crucial tool in the Data Engineering ecosystem, providing an efficient solution for the storage and processing of large volumes of data.

Although its adoption has been solid in Big Data environments, its potential transcends this field and can also be leveraged in the world of traditional Backend.

Its column-oriented design, combined with advanced data compression and support for complex, nested data structures, makes it a robust option for improving performance and efficiency when handling data in Backend applications as well.

Despite its indisputable advantages, the adoption of Parquet as a data interchange format in Java application development faces obstacles, primarily due to the complexity of its low-level API and the lack of a high-level interface that simplifies its use. The need to rely on third-party libraries adds an additional layer of complexity and dependencies, and the scarcity of accessible documentation and concrete examples poses a significant barrier for many developers.

This post has been an introduction to the format, its advantages, and the WTFs I’ve encountered along the way; don’t be discouraged. With this foundational knowledge, the forthcoming posts will focus on how to work with Parquet using different libraries: